Case Study: Monitoring Brand Safety with the GARM Brand Risk Taxonomy

The Global Alliance for Responsible Media (GARM) made headlines earlier this month when an antitrust lawsuit from Elon Musk forced the non-profit consortium of advertisers and digital platforms to shut down.

Prior to its untimely demise, GARM was known mostly to people in media and advertising for providing standards and best practices around illegal and harmful digital content. By helping advertisers understand where their ads appear, GARM's resources allowed them to avoid associating their brand with illegal and harmful content like terrorism, hate speech, and misinformation.

Though GARM is no longer with us, its resources and toolkits live on and remain valuable to advertisers and publishers concerned about brand safety. In particular, the GARM brand risk taxonomy is a common starting point for labeling content that may be problematic for ad placement. In this post, we'll dive into the GARM taxonomy and show how you can use Taylor to tag content with risk labels at scale.

GARM Brand Risk Taxonomy

GARM divides potentially-problematic content into 12 categories:

- Adult & Explicit Sexual Content

- Arms & Ammunition

- Crime & Harmful Acts to Individuals and Society and Human Right Violations

- Death, Injury or Military Conflict

- Online Piracy

- Hate Speech & Acts of Aggression

- Obscenity and Profanity

- Illegal Drugs/Tobacco/e-Cigarettes/Vaping/Alcohol

- Spam or Harmful Content

- Terrorism

- Debated Sensitive Social Issues

- Misinformation
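If you plan to store or validate these labels in code, keeping the twelve names in one place avoids drift between hand-applied tags and the official list. Below is a minimal Python sketch; the enum member names and the `parse_category` helper are our own illustrative choices, not part of any GARM or Taylor API.

```python
from enum import Enum


class GarmCategory(Enum):
    """The 12 GARM brand risk categories (member names are illustrative)."""
    ADULT_EXPLICIT = "Adult & Explicit Sexual Content"
    ARMS_AMMUNITION = "Arms & Ammunition"
    CRIME_HARMFUL_ACTS = "Crime & Harmful Acts to Individuals and Society and Human Right Violations"
    DEATH_INJURY_CONFLICT = "Death, Injury or Military Conflict"
    ONLINE_PIRACY = "Online Piracy"
    HATE_SPEECH = "Hate Speech & Acts of Aggression"
    OBSCENITY_PROFANITY = "Obscenity and Profanity"
    DRUGS_TOBACCO_ALCOHOL = "Illegal Drugs/Tobacco/e-Cigarettes/Vaping/Alcohol"
    SPAM_HARMFUL = "Spam or Harmful Content"
    TERRORISM = "Terrorism"
    SENSITIVE_SOCIAL_ISSUES = "Debated Sensitive Social Issues"
    MISINFORMATION = "Misinformation"


def parse_category(label: str) -> GarmCategory:
    """Map a free-text label to a category, ignoring case and surrounding whitespace.

    Raises ValueError for labels outside the taxonomy, so typos in hand-applied
    tags surface early instead of silently passing through.
    """
    normalized = label.strip().casefold()
    for category in GarmCategory:
        if category.value.casefold() == normalized:
            return category
    raise ValueError(f"not a GARM category: {label!r}")


print(len(GarmCategory))                    # 12
print(parse_category("  terrorism ").name)  # TERRORISM
```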

GARM further divides each category into four risk levels. This allows publishers and advertisers to distinguish anodyne content related to a category (e.g., a New York Times feature on ISIS) from extremely harmful content (e.g., ISIS recruiting videos). "Low Risk" and "Medium Risk" typically cover informative, educational, and entertainment content. "High Risk" content is typically either irresponsible (e.g., glamorizing underage drinking) or not appropriate for all audiences (e.g., full nudity), but might be acceptable for some audiences. Finally, the "Safety Floor" defines content that no advertiser should be comfortable with, such as child pornography, the sale of illegal drugs, and incitement to violence.
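Because the risk levels form an ordered scale, they map naturally onto an ordered type. Here is a minimal Python sketch, assuming (as our own convention, not a GARM rule) that a page carrying several labels is as risky as its most severe label. The integer values only encode ordering; GARM does not assign numbers.

```python
from enum import IntEnum
from typing import Optional


class RiskLevel(IntEnum):
    # Integer values encode ordering only (an illustrative assumption).
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    SAFETY_FLOOR = 4  # content no advertiser should appear next to


def overall_risk(levels: list[RiskLevel]) -> Optional[RiskLevel]:
    """Return the most severe level among a page's labels, or None if it has none."""
    return max(levels, default=None)


page_levels = [RiskLevel.LOW, RiskLevel.HIGH, RiskLevel.MEDIUM]
print(overall_risk(page_levels).name)  # HIGH
print(overall_risk([]))                # None
```

Using `IntEnum` lets levels be compared directly with `<` and `>`, which keeps threshold checks readable.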

By using this taxonomy, publishers and advertisers can speak a shared "language": advertisers can set standards for the content they're willing to place ads alongside, and publishers can ensure that the content they produce aligns with those standards. A shared taxonomy can also make it easier to sell and resell ad space through ad exchanges and demand-side platforms.
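To make the "shared language" concrete, an advertiser's standards can be expressed as a per-category maximum tolerated risk level and checked against a page's labels. The sketch below is hypothetical: the `is_suitable` function, the default tolerance of `LOW` for unmentioned categories, and the example brand profile are our own illustrative assumptions. The one rule taken from the taxonomy is that Safety Floor content is never acceptable.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    SAFETY_FLOOR = 4


def is_suitable(
    page_labels: dict[str, RiskLevel],
    max_tolerated: dict[str, RiskLevel],
    default_max: RiskLevel = RiskLevel.LOW,
) -> bool:
    """Decide whether an advertiser will place an ad next to a page.

    page_labels maps GARM category names to the page's risk level in that category.
    max_tolerated maps category names to the highest level the advertiser accepts;
    categories it does not mention fall back to default_max (an assumed default).
    """
    for category, level in page_labels.items():
        # The Safety Floor is never acceptable, whatever the advertiser's settings.
        if level >= RiskLevel.SAFETY_FLOOR:
            return False
        if level > max_tolerated.get(category, default_max):
            return False
    return True


ALCOHOL = "Illegal Drugs/Tobacco/e-Cigarettes/Vaping/Alcohol"
CONFLICT = "Death, Injury or Military Conflict"

# A hypothetical beverage brand that tolerates medium-risk alcohol content
beverage_brand = {ALCOHOL: RiskLevel.MEDIUM}

print(is_suitable({ALCOHOL: RiskLevel.MEDIUM}, beverage_brand))   # True
print(is_suitable({CONFLICT: RiskLevel.MEDIUM}, beverage_brand))  # False
print(is_suitable({}, beverage_brand))                            # True
```

Defaulting unmentioned categories to the lowest level is a conservative choice: an advertiser has to opt in explicitly before their ads appear next to riskier content.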

Labeling Content with Taylor

Now that we've discussed the usefulness of a standardized risk taxonomy, we'll explore how you can get started labeling your content. The old-school way is to tag content manually: many publishers still have content creators or editors add keywords and tags to everything they produce. But for brand safety purposes, hand-labeling is both subjective and labor-intensive, since labelers must repeatedly consult the GARM guidelines. This is especially true for publishers that want to label their entire archive.

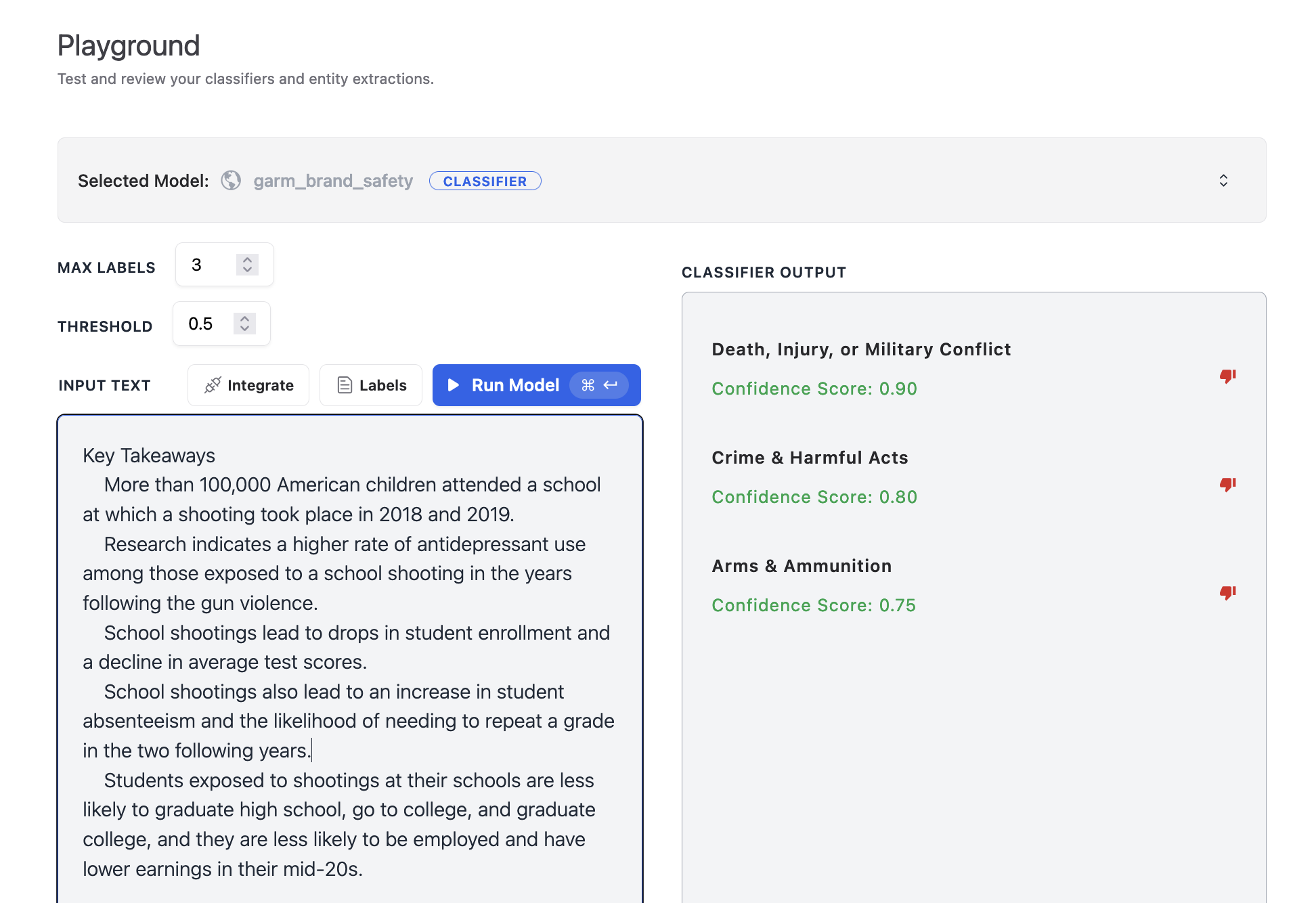

Instead, automated solutions can categorize hundreds or thousands of pages in minutes. At Taylor, we recently released a brand safety model that automatically labels content with the appropriate GARM labels. Using our interactive Playground, anyone can try out the tool, with no technical background required. Below, you can see the risk categories surfaced for a potentially risky passage about school shootings:

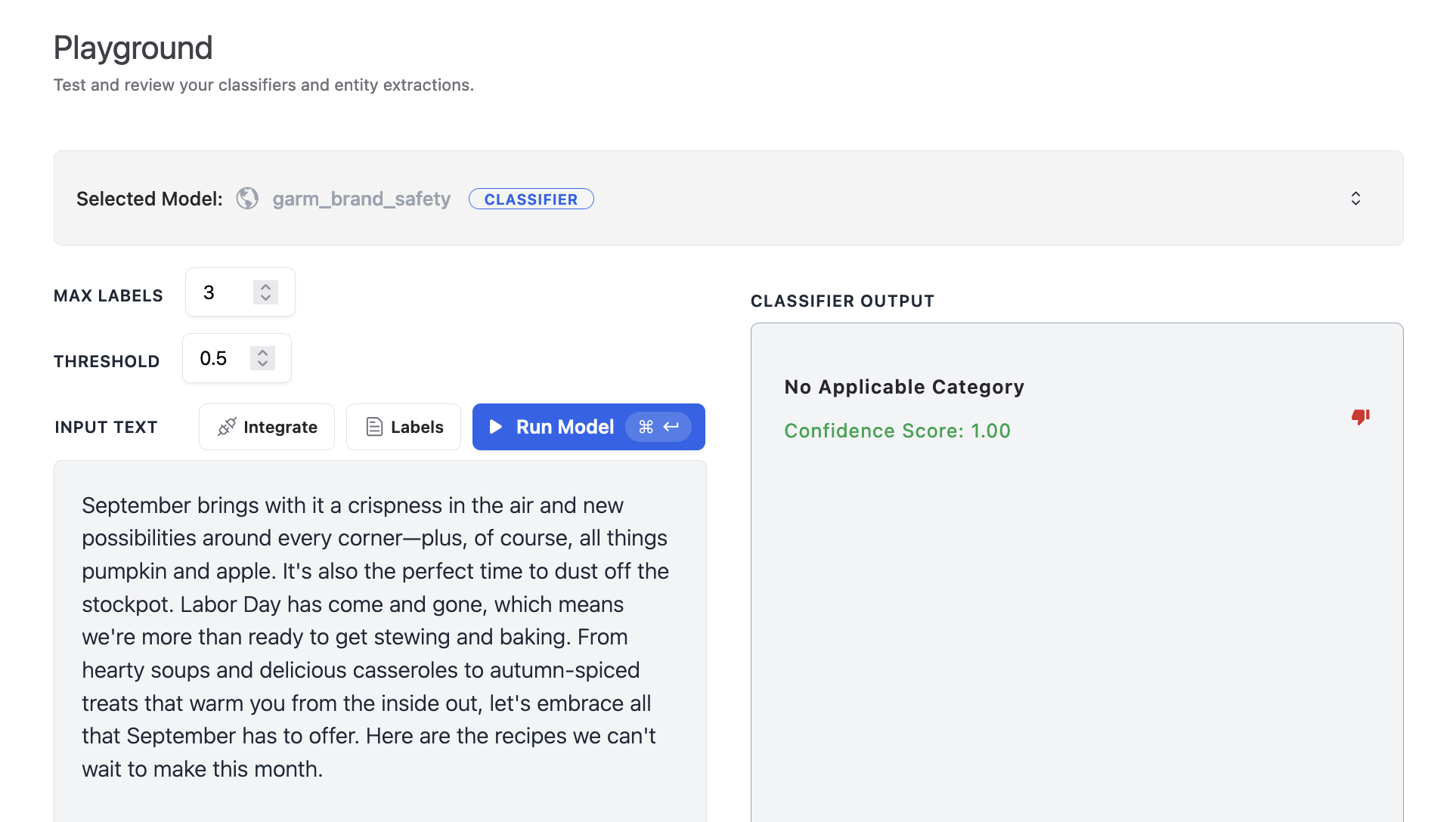

By contrast, for a harmless input about seasonal recipes, our model returns "No Applicable Category".
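Downstream code usually needs to treat that "No Applicable Category" result as "no risk" rather than as a category of its own. The sketch below assumes, hypothetically, that model output arrives as a list of category-name strings; the actual response format of Taylor's API is not described here, so adapt the input handling accordingly. The `risk_categories` and `needs_review` helpers are illustrative names.

```python
NO_CATEGORY = "No Applicable Category"


def risk_categories(model_labels: list[str]) -> set[str]:
    """Normalize a model's label list into a set of risk categories.

    Assumes (hypothetically) that the model returns a list of category names and
    uses the sentinel "No Applicable Category" for safe content. The sentinel is
    dropped, so safe pages map to an empty set.
    """
    return {label for label in model_labels if label != NO_CATEGORY}


def needs_review(model_labels: list[str]) -> bool:
    """Flag a page for human review if the model assigned any risk category."""
    return bool(risk_categories(model_labels))


print(needs_review(["No Applicable Category"]))              # False
print(needs_review(["Death, Injury or Military Conflict"]))  # True
```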

All users can test our platform on up to 1,000 examples for free. When it's time to scale up the model to an entire library of content, a software engineer can use our API to classify content in real time. Alternatively, anyone can use our web application to upload a large spreadsheet and classify thousands of pages in one go.
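When labeling a whole archive programmatically, a common pattern is to send pages in fixed-size batches and check that every page gets a result. This is a generic sketch, not Taylor's client library: `classify_batch` is a hypothetical stand-in for whatever wrapper you write around the API, and `fake_classifier` is a toy keyword rule used only so the example runs.

```python
from typing import Callable, Iterable, Iterator


def batched(items: Iterable[str], size: int) -> Iterator[list[str]]:
    """Yield lists of up to `size` items (itertools.batched does this on Python 3.12+)."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: list[str] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def label_archive(
    pages: Iterable[str],
    classify_batch: Callable[[list[str]], list[list[str]]],
    batch_size: int = 100,
) -> list[list[str]]:
    """Label every page, one classifier call per batch, preserving input order.

    classify_batch is a hypothetical stand-in for your API client; it must return
    one label list per input page.
    """
    results: list[list[str]] = []
    for batch in batched(pages, batch_size):
        labels = classify_batch(batch)
        if len(labels) != len(batch):
            raise RuntimeError("classifier returned the wrong number of results")
        results.extend(labels)
    return results


def fake_classifier(batch: list[str]) -> list[list[str]]:
    # Toy keyword rule for demonstration only; NOT Taylor's model.
    return [
        ["Death, Injury or Military Conflict"] if "shooting" in text.lower()
        else ["No Applicable Category"]
        for text in batch
    ]


archive = ["Seasonal recipes for fall", "Coverage of a school shooting", "Holiday baking tips"]
print(label_archive(archive, fake_classifier, batch_size=2))
# [['No Applicable Category'], ['Death, Injury or Military Conflict'], ['No Applicable Category']]
```

Checking the result count per batch catches partial responses early, before a mismatch silently shifts labels onto the wrong pages.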

Conclusion

Monitoring brand safety is crucial for advertisers and publishers alike. In this post, we discussed how the GARM brand risk taxonomy provides a standardized framework for assessing content risk, enabling businesses to make informed decisions about ad placement. Then, we showed how to leverage Taylor's brand safety model to efficiently label and categorize large volumes of content. In the future, we're excited to share more powerful tools for advertisers and publishers to tag content at scale. If you want to learn more or partner with us for your classification use case, please reach out!